If AI will play an essential role in your application, consider using a self-hosted, open source model instead of a proprietary, externally hosted one. In this post, we explore some of the risks of the latter option. We’re Fly.io. We put your code into lightweight microVMs on our own hardware around the world. Check us out: your app can be deployed in minutes.

The topic of “AI” gets a lot of attention and press. Coverage ranges from apocalyptic warnings to utopian predictions. The truth, as always, is likely somewhere in the middle. As developers, we are the ones who either imagine ways AI can enhance our products or take on the herculean task of implementing it inside our companies.

I believe the following statement to be true:

AI won’t replace humans — but humans with AI will replace humans without AI.

I believe this can be extended to many products and services and the companies that create them. Let’s express it this way:

AI won’t replace businesses — but businesses with AI will replace businesses without AI.

Today I’m assuming your business would benefit from using AI. Or, at the very least, your C-levels have decreed from on high that thou must integrateth with AI. With that out of the way, the next question is how you’re meant to do it. This post is an argument for building on top of open source language models instead of closed models that you rent access to. We’ll take a look at what convinced me.

But OpenAI is the market leader…

OpenAI, the creators of the famous ChatGPT, are the strong market leaders in this category. Why wouldn’t you want to use the best in the business?

Early on, stories of employees uploading private corporate documents, and that private information then leaking out to other ChatGPT users, were a real black eye. Companies began banning employees from using ChatGPT for work. The incidents exposed that people’s interactions with ChatGPT were being used as training data for future versions of the model.

In response, OpenAI recently announced an Enterprise offering promising that no Enterprise customer data is used for training.

With the top objection addressed, it should be smooth sailing for wide adoption, right?

Not so fast.

While an Enterprise offering may address that concern, there are other subtle reasons to not use OpenAI, or other closed models, that can’t be resolved by vague statements of enterprise privacy.

What are the risks for building on top of OpenAI?

Let’s briefly outline the risks we take on when relying on a company like OpenAI for critical AI features in our applications.

- Single provider risk: Relying deeply on an external service that plays a critical role in our business is risky. The integration is not easily swapped out for another service if needed. Additionally, we don’t want part of our “secret sauce” to actually be another company’s product. That’s some seriously shaky ground! They want to sell the same thing to our competitors too.

- Regulation or Policy change risk: “AI” is being talked about a lot in politics. What’s acceptable today may be deemed “not allowed” in the future, and a corporation providing a newly regulated service must comply.

- Financial risk: AI chatbots lose money on every chat. If the financial models that make our business profitable are built on prices that can’t be sustained, then our business model may be at risk when it’s time to “make the AI engine profitable,” as we’ve seen happen time and time again in industries from cookware to video games. What might the true cost be? We don’t know. ‘Nuff said.

- Governance and leadership risk: The co-founder and CEO of OpenAI, Sam Altman, was forced out of his own company by a coup from his board. This was later resolved with both Sam Altman and Greg Brockman returning. This exposes another risk we don’t often consider with our providers. More on this later.

Let’s look a bit closer at the “Single provider risk”.

Single provider risk

For hobby usage, proof of concept work, and personal experiments, by all means, use ChatGPT! I do, and I expect to keep doing so. It’s fantastic for prototyping, it’s trivial to set up, and it allows you to throw ink on canvas so much more quickly than any other option out there.

Up until recently, I was all gung-ho for ChatGPT being integrated into my apps. What happened? November 2023 happened. It was a very bad month for OpenAI.

I created a Personal AI Fitness Trainer powered by ChatGPT. On the morning of November 8th, I asked my trainer about the day’s workout, and it failed. OpenAI was having a bad day: an outage.

I don’t fault someone for having a bad day. At some point, downtime happens to the best of us. And given enough time, it happens to all of us. But when possible, I want to prevent someone else’s bad day from becoming my bad day too.

Evaluating a critical dependency

In my case, my personal fitness trainer being unavailable was a minor inconvenience, but I managed. However, it gave me pause. If I had built an AI fitness trainer as a service, that outage would be a much bigger deal and there would be nothing I could have done to fix it until the ChatGPT API came back up.

With services like a Personal AI Fitness Trainer, the AI component is the primary focus and main value proposition of the app. That’s pretty darn critical! If that AI service is interrupted, significantly altered (say, by the model suddenly refusing my requests for fitness information in ways that worked before) or my desired usage is denied (without warning or reason), the application is useless. That’s an existential threat that could make my app evaporate overnight without warning.

This highlights the risk of having a critical dependency on an external service.

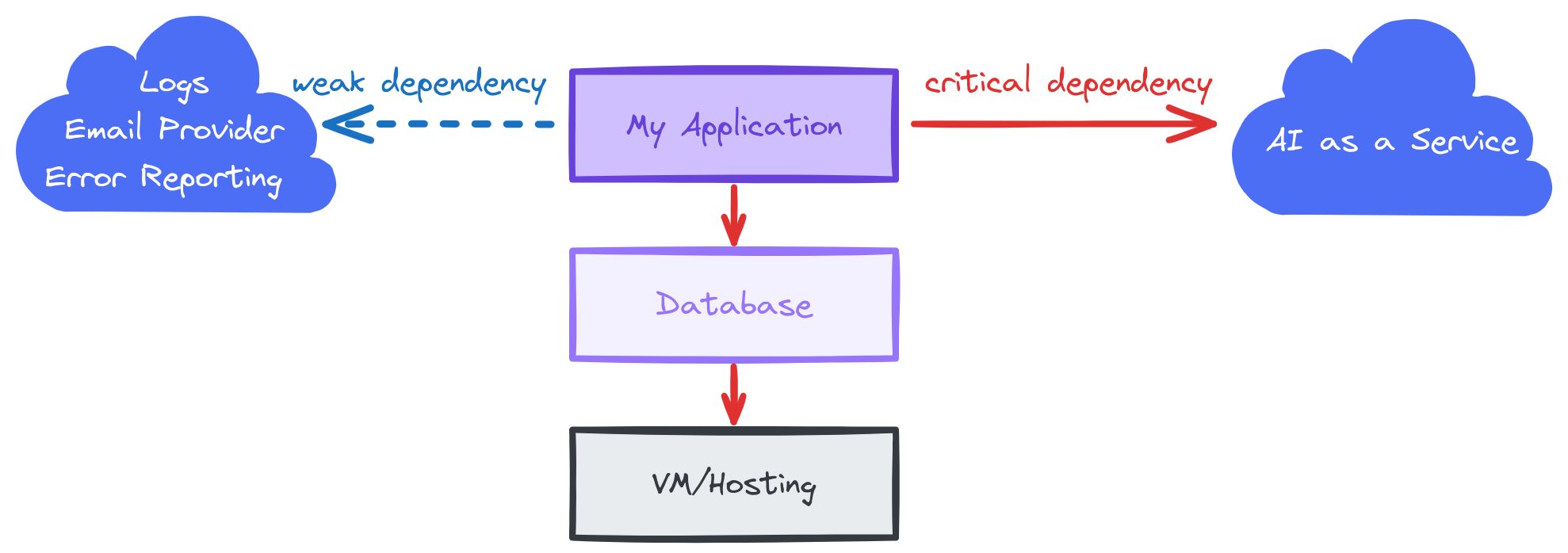

Modern applications depend on many services, both internal and external. But how critical each dependency is matters.

Let’s take a very simple application as an example. The application has a critical dependency on the database and both the app and database have a critical dependency on the underlying VMs/machines/provider. These critical dependencies are so common that we seldom think about them because we deal with them every day we come to work. It’s just how things are.

The danger comes when we draw a critical dependency line to an external service. If the service has a hiccup or the network between my app and their service starts dropping all my packets, the entire application goes down. Someone else’s bad day gets spread around when that happens. 😞
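The diagram’s point can be made concrete by treating dependencies as a graph and asking which external services sit on a path of critical edges from the app. Below is a minimal Python sketch under stated assumptions: the service names, the edge list, and which edges count as critical are all hypothetical, chosen to mirror the example above rather than any real system.

```python
# Hypothetical dependency graph: each edge is (dependent, dependency, is_critical).
EDGES = [
    ("app", "database", True),
    ("app", "machines", True),
    ("database", "machines", True),
    ("app", "ai_api", True),          # external LLM provider on the critical path
    ("app", "log_aggregator", False),
    ("app", "email_service", False),
    ("app", "error_reporting", False),
]

# Services run by someone else. "machines" is treated here as infrastructure
# we already plan around, so it is deliberately left out of this set.
EXTERNAL = {"ai_api", "log_aggregator", "email_service", "error_reporting"}


def critical_external_deps(root, edges, external):
    """Return external services reachable from `root` using only critical edges."""
    graph = {}
    for src, dst, critical in edges:
        if critical:
            graph.setdefault(src, []).append(dst)

    # Depth-first walk over critical edges only.
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        for dep in graph.get(node, []):
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return sorted(seen & external)


print(critical_external_deps("app", EDGES, EXTERNAL))  # ['ai_api']
```

Marking the `ai_api` edge as non-critical (for example, because the app can keep working without it) makes the function return an empty list, which is the property the next paragraph is after.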

In order to protect ourselves from a risk like that, we should diversify our reliance away from a single external provider. How do we do that? We’ll come back to this later.
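The post doesn’t prescribe a specific mechanism at this point, but one common pattern for diversifying is an ordered fallback chain: try the primary provider and, if it fails, move on to the next one. The sketch below assumes every provider can be wrapped in a plain prompt-to-completion function; `external_api` and `self_hosted` are hypothetical stand-ins, not real client libraries.

```python
import logging
from typing import Callable, Sequence, Tuple

logger = logging.getLogger(__name__)

# A provider is anything that turns a prompt into a completion. Real code would
# wrap an API client or a self-hosted inference server; these are stand-ins.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(prompt: str, providers: Sequence[Tuple[str, Provider]]) -> str:
    """Try each (name, provider) pair in order; return the first successful result."""
    errors = []
    for name, provider in providers:
        try:
            return provider(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            logger.warning("provider %s failed: %s", name, exc)
            errors.append((name, exc))
    raise AllProvidersFailed(errors)


# Hypothetical providers: the external API is having a bad day.
def external_api(prompt: str) -> str:
    raise ConnectionError("upstream outage")


def self_hosted(prompt: str) -> str:
    return f"workout plan for: {prompt}"


print(complete_with_fallback("leg day", [("external", external_api), ("self-hosted", self_hosted)]))
```

A fallback only helps if the providers are interchangeable enough for your prompts. Switching models can change output quality and format, which is part of why a deep single-provider integration is hard to swap out in the first place.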

We are not without dependencies

It’s really common for apps to have external dependencies. The question is how critical to our service are those dependencies?

What happens to the application when the external log aggregation service, email service, and error reporting services are all unreachable? If the app is designed well, then users may have a slightly degraded experience or, best case, the users won’t even notice the issues at all!

The key factor is that these external services are not essential to our application functioning.
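In code, “not essential” usually means a failure in one of these calls is caught and logged instead of propagated. A minimal sketch, assuming a hypothetical `send_welcome_email` helper standing in for an external email service that is currently unreachable:

```python
import functools
import logging

logger = logging.getLogger(__name__)


def best_effort(default=None):
    """Decorator for calls to non-critical services: log failures, return `default`."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except Exception:
                logger.exception("non-critical call %s failed; continuing", func.__name__)
                return default
        return wrapper
    return decorator


@best_effort(default=False)
def send_welcome_email(address):
    # Hypothetical stand-in for an external email service that is down.
    raise TimeoutError("email service unreachable")


def sign_up(address):
    # The critical work (creating the account) is not blocked by the email failure.
    account = {"email": address, "active": True}
    account["welcome_email_sent"] = send_welcome_email(address)
    return account


print(sign_up("user@example.com"))
```

The same decorator would be the wrong tool for the AI call in an app like the fitness trainer: returning a default there just hides the fact that the core feature is down.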

Regulation or Policy change risk

Our industry has a lot of misconceptions, fear, uncertainty, and doubt around the idea of regulation, but sometimes the concern is justified. I don’t want you to think about regulation as a scary thing that yanks away control. Instead, let’s think of regulation as a government body stepping in to prohibit businesses from engaging in specific activities. Because our industry has set its own rules for so long, this feels like an existential threat. However, regulation is a good thing when we think about vehicle safety standards (you don’t want your 4-ton mass of metal exploding while traveling at 70 mph), pollution, health risks, and more. It’s a careful balance.

Ironically, Sam Altman has been a major proponent for government regulation of the AI industry. Why would he want that?

It turns out that regulation can also be used as a form of protectionism. Put another way, when the people with an early lead see that their position isn’t defensible against advances in open source AI models, they want to pull up the ladder behind them and have the government make it legally harder, or impossible, for competitors to catch up.

If Altman’s efforts are successful, then companies who create AI can expect government involvement and oversight. Added licensing requirements and certifications would raise the cost of starting a competing business.

At this point, you may be thinking something like “but all of that is theoretical, Mark. How would this affect my business’ use of AI today?”

Introducing an external organization that can dictate changes to an AI product risks breaking a company’s existing applications or significantly reducing their effectiveness. And those changes may come without notice or warning.

Additionally, if my business is built on an external AI system protected from competition by regulators, that adds a significant risk. If they are now the only game in town, they can set whatever price they want.

Governance and leadership risk

Just over a week after the OpenAI outage (November 17th, to be precise), the entire tech industry was upended by a post on the OpenAI blog announcing that the board had fired co-founder and CEO Sam Altman. Greg Brockman, co-founder and President, then resigned in protest.

OpenAI is partnered with Microsoft and on Nov 20, 2023, Satya Nadella (CEO of Microsoft) posted the following on X (formerly Twitter):

We remain committed to our partnership with OpenAI (OAI) and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners. We look forward to getting to know Emmett Shear and OAI’s new leadership team and working with them. And we’re extremely excited to share the news that Sam Altman and Greg Brockman, together with colleagues, will be joining Microsoft to lead a new advanced AI research team. We look forward to moving quickly to provide them with the resources needed for their success.

Microsoft nearly acqui-hired OpenAI for $0! That’s some serious business jujutsu.

In the end, after 12 days of very public corporate chaos, Sam Altman and Greg Brockman returned to OpenAI in their previous leadership positions as if nothing had happened (save for most of the board being replaced).

With all the drama and uncertainty resolved, you may say, “it all worked out in the end, right? So what’s the problem?”

This highlights the risk of building any critical business system on a product offered and hosted by an external company. When we do that, we implicitly take on all of that company’s risks in addition to the risks our business already has! In this case, it’s taking on all the risks of OpenAI while getting none of their financial benefits!

What’s the alternative?

The thing big AI providers like OpenAI and Google seem to fear most is competition from open source AI models. And they should be afraid. Open source AI models continue to develop at a rapid pace (there are significant improvements nearly every week) and, most importantly, they can be self-hosted.

Additionally, it’s not out of reach for us to fine-tune a general model to better fit our needs, adding and removing capabilities rather than hoping that the capabilities we need suddenly manifest.

Doesn’t this all sound like the classic argument in favor of open source?

If we have the model and can host it ourselves, no one can take it away. When we self-host it, we are protected from:

- service interruptions from an external provider for a critical system

- changes in licensing or usage fees (such as your provider suddenly doubling inference costs without warning via an email sent at 3AM)

- government regulators dictating a change to the model that negatively affects our use case (assuming our use isn’t breaking the law, of course)

- company policy changes that alter the behavior of the model we rely on

- rogue boards or a leadership crisis that impacts a provider

Using an open source and self-hosted model insulates us from these external risks.

I still need GPUs!

Getting dedicated access to a GPU is more expensive than renting limited time on OpenAI’s servers. That’s why a hobby or personal project is better off paying only for the brief bursts of time it actually needs.
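The trade-off here is a fixed cost against a usage-based one. A back-of-the-envelope sketch of the break-even point follows; the prices in the example are made-up placeholders, not actual OpenAI or Fly.io pricing, and the model assumes a single GPU can actually serve the volume in question.

```python
def break_even_tokens_per_month(
    gpu_cost_per_hour: float,
    hours_per_month: float,
    api_price_per_million_tokens: float,
) -> float:
    """Monthly token volume at which a dedicated GPU costs the same as a per-token API.

    Ignores GPU throughput limits, engineering time, and the non-financial
    benefits of self-hosting discussed in this post.
    """
    monthly_gpu_cost = gpu_cost_per_hour * hours_per_month
    return monthly_gpu_cost / api_price_per_million_tokens * 1_000_000


# Placeholder numbers for illustration only.
tokens = break_even_tokens_per_month(
    gpu_cost_per_hour=2.50, hours_per_month=730, api_price_per_million_tokens=2.00
)
print(f"{tokens:,.0f} tokens/month")  # 912,500,000 tokens/month
```

Below that volume, paying per request is cheaper, which matches the advice for hobby projects; well above it, the dedicated GPU starts to pay for itself on cost alone.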

But let’s face it.

If you really want to integrate AI into your business, you need to host your own models. You can’t control third-party privacy policies, but you can control your own policies when you are the one doing your own inference with your own models. Ideally, this means buying your own GPUs and taking on the capital and operating expenditures, but thankfully we’re in the future. We have the cloud now. There are many options for renting GPU access from other companies, including the big clouds as well as Fly.io. You can check out our GPU offerings here.

Running inference on your own hosted models can help de-risk critical AI integrations.

Closing thoughts

It’s important to take advantage of AI in our applications so we can reap the benefits. It can give us an important edge in the market! However, we should be extra cautious about building any critical features on a product offered by a proprietary external business. Others are considering the risks of building on OpenAI as well.

Your specific level of risk depends on how central the AI aspect is to your business. If it’s a central component, like in my Personal AI Fitness Trainer, then I risk losing all my customers and even the company if any of the above-mentioned risks hit my AI provider. That’s an existential risk that I can’t do anything about without heroic emergency efforts.

If the AI is sprinkled around the edges of the business, then suddenly losing it won’t kill the company. However, if the AI isn’t being well utilized, then the business may be at risk from competitors who place a bigger bet and take a bigger swing with AI.

Oh, what interesting times we live in! 🙃