We’re Fly.io. We run apps for our users on hardware we host around the world. This post is about quickly launching a Machine to run a JavaScript function as an alternative to a Lambda service. It’s easy to get started with your JS app on Fly.io!

A couple of months ago, Chris McCord, the creator of the Phoenix framework, published an article about a new pattern called FLAME as an alternative to serverless computing. The original article focused on the implementation in Elixir, which has some unique clustering capabilities that make it exceptionally well-suited for the FLAME pattern, and this had some folks asking – what would FLAME look like in other languages?

Today we will explore some possible approaches to FLAME in JavaScript. These solutions are experimental and, as you’ll see, not without trade-offs, but let’s see how far we get!

What is FLAME?

As a quick recap, FLAME stands for Fleeting Lambda Application for Modular Execution. It auto-scales tasks simply by wrapping any existing code in a function and having that block of code run in a temporary copy of the app.

In JavaScript, it might look something like this:

function doSomeWork(id) { ... }

// run `doSomeWork` on another machine
export default flame(doSomeWork, ...)

FLAME removes the FaaS labyrinth of complexity and is cloud-provider agnostic. While the Elixir library that Chris wrote is designed specifically for spinning up Machines on Fly.io, FLAME is a pattern that can be used on any cloud that provides an API for spinning up instances of your application.

For a more thorough rundown of FLAME, be sure to read Chris’s full article here: FLAME: Rethinking Serverless

What are Machines on Fly.io?

Fly Machines are the engine of the Fly.io platform: fast-launching VMs that can be started and stopped at subsecond speeds. Fly Machines themselves are not containers, but they do run your application code inside containers. You can read more about them here, but for this article, all you need to know is that Machines are just instances of your application code.

FLAME in vanilla, no-build JavaScript

As mentioned earlier, Elixir is uniquely suited to FLAME because of its clustering abilities. The main question for implementing this pattern in other languages is this: how do you run specific code blocks on an entirely different instance of your app?

In this post, we’re pulling back the curtain on the inner workings so you, as the developer, can feel more confident about using FLAME in your app. Once you have a working FLAME library, though, you should be able to drop it into your project and start making FLAME calls.

Overview

My teammate, Lubien, wrote a sample JavaScript app that implements FLAME a little differently than how it’s done in Elixir. You can find the complete files at https://github.com/fly-apps/fly-run-this-function-on-another-machine

So, how does it work?

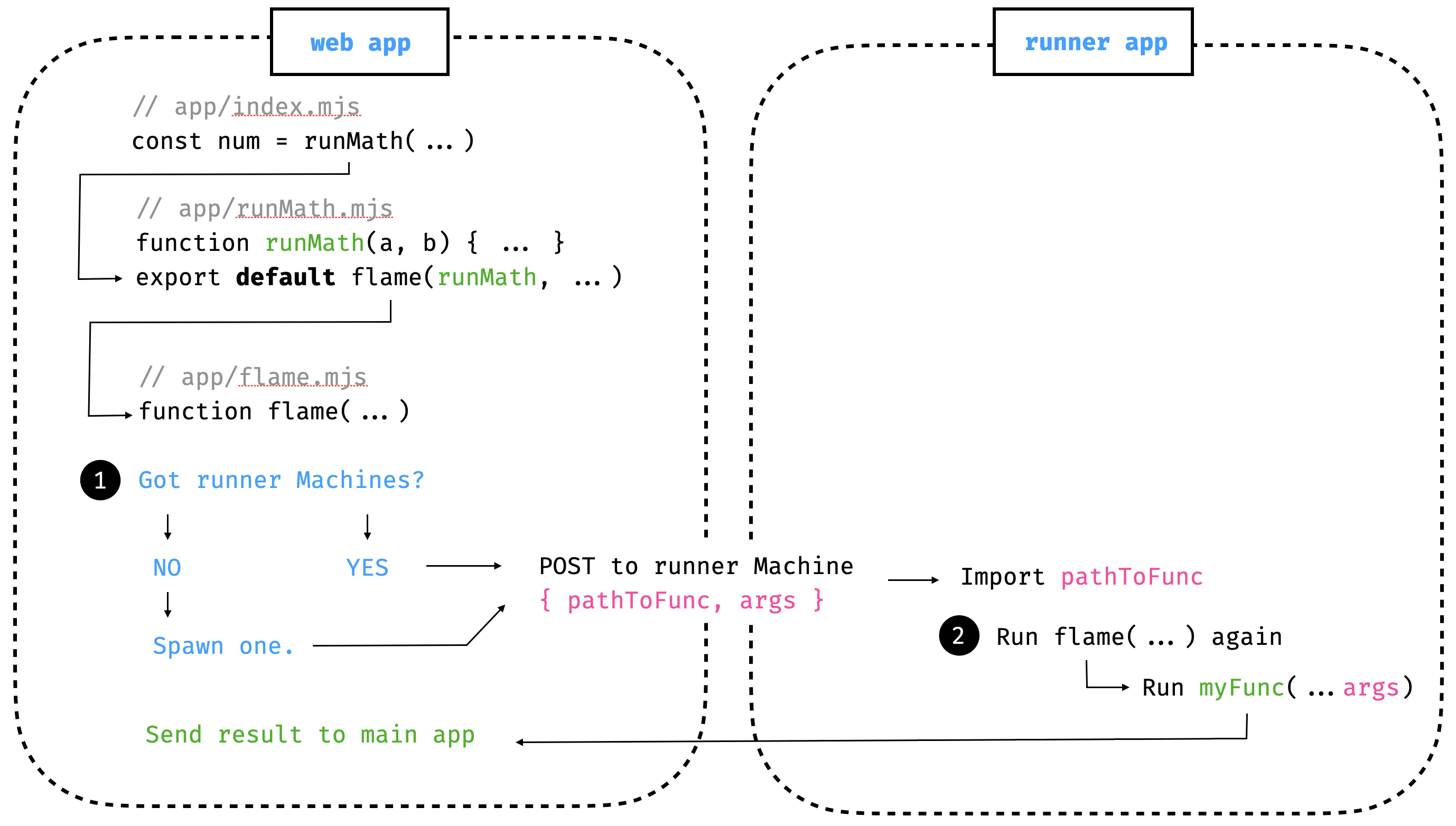

Whenever we want to run a task on another Machine, we pass the code as a parameter to our FLAME function, which punts it off to be run on the other Machine, which we will call our runner Machine.

An important thing to understand is that this FLAME call is always executed twice, and it will return one of two functions:

The first time it’s run on our normal app instance and returns an anonymous function that…

- First checks for existing runner Machines and spawns one if none are found. Runner Machines are copies of our application code, but replace our starting process with a simple HTTP server.
- Then, it makes a POST request to that runner Machine, with two parameters:
  - filepath – the absolute path to the file containing our FLAME-wrapped code
  - args – any arguments needed for the code inside your FLAME function

The second time it’s run will be on our runner Machine, and this time our original code block will be returned, to be executed with the args we passed in via our POST request.
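To make the two calls concrete, here is a toy simulation that runs both sides in a single process. It is only a sketch: makeFlame, fakeRunner, the isRunner flag, and the send callback are hypothetical stand-ins for the real library, the IS_RUNNER check, and the POST request, and the path is a made-up example.

```javascript
// Toy, single-process simulation of the two FLAME calls (hypothetical names).
// `isRunner` stands in for the runner check; `send` stands in for the POST.
function makeFlame({ isRunner, send }) {
  return function flame(originalFunc, config) {
    // Second call (on the runner): hand back the real function.
    if (isRunner) return originalFunc;
    // First call (on the app): hand back a proxy that forwards the call.
    return async (...args) => send({ filepath: config.path, args });
  };
}

// The "module" both sides load, parameterised by which flame it receives.
const loadRunMath = (flame) =>
  flame((a, b) => a + b, { path: "/app/runMath.mjs" });

// Runner side: parse the body, load the module, call the original function.
const runnerFlame = makeFlame({ isRunner: true });
async function fakeRunner(body) {
  const { args } = JSON.parse(body);
  return loadRunMath(runnerFlame)(...args);
}

// App side: "sending" serialises the payload and calls the fake runner.
const appFlame = makeFlame({
  isRunner: false,
  send: (payload) => fakeRunner(JSON.stringify(payload)),
});
const runMath = loadRunMath(appFlame);

runMath(100, 20).then((sum) => console.log(sum)); // logs 120
```

The same wrapped module yields a forwarding proxy on the app side and the plain function on the runner side, which is the whole trick.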

With that overview out of the way, let’s dive into Lubien’s code.

The Files

In Lubien’s app, there are three files involved in pulling off our FLAME implementation:

- index.mjs – This is where our application starts, and it calls the function containing our FLAME-wrapped code, runMath
- runMath.mjs – This is where we import runMath from; it exports the returned value of our FLAME function. Unlike the Elixir implementation, every FLAME-wrapped task needs to be the default export of its file.
- flame.mjs – This is our FLAME library. This code is specific to spinning up Fly Machines, but again, this could just as easily be swapped for another cloud provider API.

Let’s open up each file and see how the magic works. ✨

index.mjs

Lubien’s FLAME app starts in ./index.mjs, which runs a single function, runMath:

// index.mjs
import runMath from './runMath.mjs'

async function main() {
  const math = await runMath(100, 20)
  console.log(math)
}

main()

runMath.mjs

Inside of ./runMath.mjs, we find the following:

// runMath.mjs
import flame from "./flame.mjs"

export default flame((a, b) => {
  return a + b
}, {
  path: import.meta.url,
  guest: {
    cpu_kind: "shared",
    cpus: 2,
    memory_mb: 1024
  }
})

This file doesn’t actually contain a function called runMath, but instead exports whatever flame returns. flame is roughly the equivalent of the Elixir FLAME.call method, and it returns a function.

flame accepts the following parameters:

- originalFunc: The function you want to execute on another Machine. In this example, we’ve passed it as an anonymous function.
- config:
  - path: the path to the current file. This is what we’ll pass to our runner server as the filepath
  - guest: the specs for the Machine you want to spin up

flame.mjs

Next, if we look inside ./flame.mjs we find a much longer file. As stated before, this is our FLAME library. This does a number of things, including code that…

- Checks to see if there are any existing runner Machines
- Spawns a new runner Machine if none are found
- Boots up our simple HTTP server on our runner Machines
- Schedules timeouts for our runner Machines to shut down
- In a POST request, imports & runs our FLAME code block
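The shutdown timeouts in that list could work like a resettable idle timer: every request pushes the shutdown back, and the runner stops only after a quiet period. The sketch below is an assumption about one way to do this, not the library’s actual code. createIdleTimer, touch, and cancel are hypothetical names, and how the runner stops itself inside onIdle is left open.

```javascript
// Hypothetical idle-shutdown helper: calls `onIdle` once no request has
// arrived for `idleMs`. The timer functions are injectable so the logic
// can be exercised without waiting on real time.
function createIdleTimer(onIdle, idleMs, timers = globalThis) {
  let handle = null;
  return {
    // Call on every incoming request to push the shutdown back.
    touch() {
      if (handle !== null) timers.clearTimeout(handle);
      handle = timers.setTimeout(onIdle, idleMs);
    },
    // Call to abandon a pending shutdown entirely.
    cancel() {
      if (handle !== null) timers.clearTimeout(handle);
      handle = null;
    },
  };
}
```

A runner would call touch() once at boot and again at the top of its request handler, so a busy runner never shuts down mid-stream.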

Rather than step through every line in this file, we’re just going to focus on the flame function, but you can check out the commented documentation here: https://github.com/fly-apps/fly-run-this-function-on-another-machine/blob/main/flame.mjs

Let’s skip down to where flame is actually defined:

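// ./flame.mjs
...
export default function flame(originalFunc, config) {
  // On a runner Machine, hand back the original function untouched
  if (IS_RUNNER) {
    return originalFunc
  }

  const {
    path,
    guest = {
      cpu_kind: "shared",
      cpus: 1,
      memory_mb: 256
    }
  } = config

  // `path` is a file URL (import.meta.url); convert it to an absolute path
  // (`url` is Node's built-in "node:url" module)
  const filename = url.fileURLToPath(path);

  // On the app, return a function that forwards the call to a runner Machine
  return async function (...args) {
    if (!(await checkIfThereAreWorkers())) {
      await spawnAnotherMachine(guest)
    }

    return await execOnMachine(filename, args)
  }
}
...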

As mentioned before, our flame function is always called twice. Above we can see that it will either return our original function that we passed in, OR it will return an anonymous function that checks for runner Machines and sends a request to one.
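The helpers that check for and spawn runner Machines aren’t shown in this post. As a rough sketch only, here is how the two requests behind them might be built, assuming the Fly Machines REST API lives at https://api.machines.dev/v1 and accepts GET and POST on /apps/{app}/machines. The names buildListRequest and buildSpawnRequest, and the choice to pass IS_RUNNER through env, are illustrative assumptions rather than Lubien’s actual code. Overriding the start command is left out entirely, so check the Machines API docs before relying on any of this.

```javascript
// Assumed base URL of the Fly Machines API; verify against the current docs.
const MACHINES_API = "https://api.machines.dev/v1";

// Build (but do not send) the request that lists an app's Machines.
function buildListRequest({ appName, token }) {
  return {
    url: `${MACHINES_API}/apps/${appName}/machines`,
    options: { method: "GET", headers: { Authorization: `Bearer ${token}` } },
  };
}

// Build (but do not send) the request that creates a runner Machine.
// `image` is the app's own image, so the runner is a copy of the app.
function buildSpawnRequest({ appName, token, image, guest }) {
  return {
    url: `${MACHINES_API}/apps/${appName}/machines`,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        config: {
          image,
          guest, // e.g. { cpu_kind: "shared", cpus: 1, memory_mb: 256 }
          env: { IS_RUNNER: "1" }, // assumption: tells flame() it is on a runner
        },
      }),
    },
  };
}
```

Keeping the request builders separate from the network call means each one can be passed straight to fetch(url, options) and also inspected without touching the network.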

Further up the file, we define how to handle POST requests on our runner Machine. This is where we import the module from the file that contains our FLAME call. This is the second time flame is called, and this time it returns our original function, which gets executed with the args passed to it.

// ./flame.mjs
...
if (IS_RUNNER) {
  ...
  // get the request params: the file path (sent as `filename`) and `args`
  const { filename, args } = JSON.parse(body)

  // import the module at that path
  // in this case, the absolute path to runMath.mjs
  const mod = await import(filename)

  // run the module's default export (a function) and pass in `args`
  const result = await mod.default(...args)
  ...
}
...
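One consequence of the runner calling JSON.parse on the request body is that arguments only arrive intact if they survive a JSON round trip. Functions, undefined, Date objects, and Map instances do not. The guard below is a hypothetical helper (assertJsonSafe and sameShape are not part of the library) that an app could run before sending, so the failure shows up locally rather than as a confusing result on the runner.

```javascript
// Hypothetical guard: throws if `args` would change when sent as JSON.
function assertJsonSafe(args) {
  const roundTripped = JSON.parse(JSON.stringify(args));
  if (!sameShape(args, roundTripped)) {
    throw new TypeError("FLAME args must survive a JSON round trip");
  }
  return roundTripped;
}

// Minimal structural comparison for plain JSON-like data.
function sameShape(a, b) {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) {
    return false;
  }
  // Catches Date, Map, class instances, and array-vs-object mismatches.
  if (Object.getPrototypeOf(a) !== Object.getPrototypeOf(b)) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  return (
    keysA.length === keysB.length &&
    keysA.every((key) => sameShape(a[key], b[key]))
  );
}
```

With this in place, assertJsonSafe([100, 20]) passes, while assertJsonSafe([new Date()]) throws because the date would arrive as a string.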

And that’s it! FLAME implemented in vanilla JavaScript.

Understanding how this FLAME pattern works is very helpful, and once you have your FLAME library working for your preferred cloud provider (Fly.io or others), you can start abstracting any functions that you’d like to auto-scale with a FLAME wrapper, like so:

export default flame(doSomeWork, ...)

Limitations of FLAME in JavaScript

The biggest limitation in the flow defined above comes down to the assumption that running our FLAME function is as simple as importing the file that contains it, and running the default module. This only works when you…

- Have your FLAME-wrapped code block as the default export of a file
- Are not mixing CommonJS and ES Module syntax
- Are NOT using TypeScript 😢

It’s especially tricky with any transpiled code like TypeScript since it means you can’t simply import the file as is unless your HTTP server was using something like ts-node instead of node. For better performance, you could transpile all of your FLAME TypeScript code, but this would add extra files to your project that you may not want. If your application is using Bun or Deno, however, all these TypeScript problems are a non-issue, since those runtimes support it out of the box!
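Since the first prerequisite is easy to get wrong, a runner could check the imported module before calling it and fail with a clear message. This is a small sketch with a hypothetical helper name, getFlameFunction; it takes whatever the dynamic import returned plus the path, purely for the error message.

```javascript
// Hypothetical check a runner could apply to the result of a dynamic import.
function getFlameFunction(mod, filepath) {
  if (!mod || typeof mod.default !== "function") {
    throw new TypeError(
      `${filepath} must export its FLAME-wrapped function as the default export`
    );
  }
  return mod.default;
}
```

A named export such as runMath would be rejected here, which matches the limitation above: only the default export is ever called.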

Using FLAME in a JavaScript framework

It’s entirely possible to drop the FLAME library into any JavaScript framework, such as Next.js, and have it work, provided that the above-mentioned prerequisites are satisfied. However, you’d have to ditch the TypeScript.

An alternative pattern could be running an exact copy of your web application, instead of using your app image and overriding the start command. In other words, instead of using a slimmed-down HTTP server on your runner Machines, you could stick with your standard npm run start (or whatever your start command is). This would likely require some extra configuration in your framework to handle the FLAME POST requests, however, so it’s not a perfect turn-key solution.

Cold starts & pooling

While Fly Machine cold starts are extremely fast, they still take a few hundred milliseconds, so it’s worth weighing their impact on performance. The code we’ve shown today will boot up a new runner Machine if none are found, which does incur cold start time. Luckily, it’s currently free (and soon, cheap) to have stopped Machines ready for near-instant startups. For this reason, you may want to consider maintaining a pool of runner Machines that can quickly be started and stopped.
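A pool like this needs a rule for which Machine to reach for. One possible policy, sketched below, prefers a runner that is already started, falls back to starting a stopped one, and only creates a new Machine as a last resort. pickRunner is a hypothetical name, and the sketch assumes each Machine is described by an id and a state string such as "started" or "stopped"; acting on the decision through the Machines API is left out.

```javascript
// Hypothetical pool policy. `machines` is a list of { id, state } objects.
function pickRunner(machines) {
  // Cheapest option: a runner that is already up.
  const started = machines.find((m) => m.state === "started");
  if (started) return { action: "use", id: started.id };

  // Next best: wake a stopped runner instead of building a new one.
  const stopped = machines.find((m) => m.state === "stopped");
  if (stopped) return { action: "start", id: stopped.id };

  // Nothing usable in the pool: create a fresh runner.
  return { action: "create", id: null };
}
```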

Autoscaling for request queuing

Now, even if we have a pool of runner Machines available at our beck and call, these could still become inundated with FLAME requests. A more production-ready implementation would need to have a way of scaling up runner Machines based on a given metric, such as response time.
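As an illustration of scaling on response time, the sketch below uses a simple proportional rule: if responses take three times longer than the target, ask for three times as many runners, clamped to a minimum and maximum. The function name, parameters, and default limits are all assumptions made for the example; a production setup would also want smoothing so the pool doesn’t thrash.

```javascript
// Hypothetical proportional scaling rule based on average response time.
function desiredRunnerCount({ current, avgResponseMs, targetMs, min = 1, max = 10 }) {
  // Treat an empty pool as one runner so the ratio has something to scale.
  const base = Math.max(current, 1);
  const wanted = Math.ceil(base * (avgResponseMs / targetMs));
  // Never go below `min` or above `max`.
  return Math.min(max, Math.max(min, wanted));
}
```

For example, two runners averaging 900 ms against a 300 ms target would call for six runners, while four runners averaging 150 ms would shrink to two.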

Conclusion

Deploying on Fly.io makes this process even simpler by providing a convenient Machines API for scaling instances of your app. And while building a cloud-specific FLAME library takes a bit of work upfront, once it’s done, you have an incredibly simple way of auto-scaling select tasks in your application.