Deploy now on Fly.io, and get your Laravel app running in a jiffy!

When we think about first steps in importing spreadsheet data, we usually think of uploading the file first to the server, before processing the import.

But. What if we didn’t need to upload the file at all?

In this article, we’ll import data from our spreadsheet file, but completely skip file upload to the server. Instead, we’ll directly send batches of data rows from our selected spreadsheet file in the browser to our server—easily and quickly, with the help of SheetJS CE, Web Workers, and Livewire!

Version Notice. This article focuses on Livewire v2, details for Livewire v3 would be different though!

The Setup

Here’s a Github Readme pointing to relevant files we’ll be using in our setup today.

To start off, create a Livewire component by running: php artisan make:livewire excel-importer. Our view should contain an input element users can upload spreadsheet files to, and a button they can click on to submit their file for processing:

<div>

<h1>Import Excel Data</h1>

<form onsubmit="return process()">

<input type="file" id="myFile" />

<input type="submit" />

</form>

</div>

Next, let’s add in SheetJS CE to our view. There’re various ways we can include SheetJS CE into our project, the quickest being the use of its “standalone browser script”:

<script lang="javascript" src="https://cdn.sheetjs.com/xlsx-latest/package/dist/xlsx.full.min.js" ></script>

Once the user selects a spreadsheet file, and clicks on the submit button, we’ll do the following:

- Extract row data from the file using SheetJS CE

- Send row data to the server using Livewire

- Validate row columns with Livewire’s validate method

- Queue a job to process the validated rows in the background

Along the way we’ll also make changes to possibly accommodate large file processing:

- Use of web workers to read and parse file

- Batched sharing of rows with Livewire

- Use of another library Papa Parse for csv files

Extracting and Parsing Data

When a user submits their file for processing, the process() function will be called. This reads the file’s content as an array of bytes, called “ArrayBuffer” using the FileReader api:

/* resources\views\livewire\excel-importer.blade.php */

<script>

// Reader and File Reference

var reader = new FileReader();

var fileElement = document.querySelector('#myFile');

function process(){

// Content as an ArrayBuffer

reader.readAsArrayBuffer(fileElement.files[0]);

Next, we’ll have to make sense of these “raw” bytes from our spreadsheet file, transform it into readable, row data. But how do we do that?

There are all kinds of spreadsheet types out there—CSVs, XLSXs, XLSs, and so forth—and they have different ways for parsing their content. Luckily for us, SheetJS CE accommodates parsing a multitude of spreadsheet file formats, and so, it’s one of our superstars for today. We’ll use it to parse spreadsheet content into an array of rows.

Specifically, we’ll use its magic parser, XLSX.read, to parse its raw content:

reader.onload = function(event) {

// Parse raw content

var workbook = XLSX.read( event.target.result );

// Release after read

event.target.result = null;

Spreadsheets can contain more than one sheet, and SheetJS CE handles this by storing sheet names in its SheetNames array. We can use each name as a key to access their corresponding sheet content. Then, with our sheet content available, we can format this to an array of arrays using XLSX.utils.sheet_to_json:

workbook.SheetNames.forEach(function(sheetName) {

// Current sheet

var sheet = workbook.Sheets[sheetName];

// Include header param to return array of arrays

var rows = XLSX.utils.sheet_to_json(sheet, {header:1});

});

} // End reader.onload

} // End process()

</script>

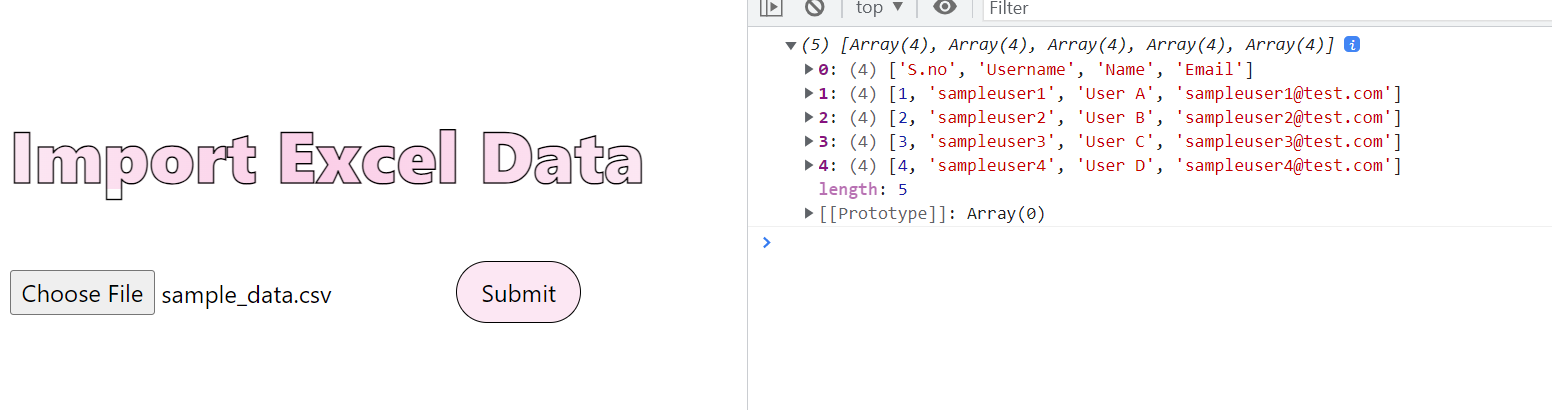

Passing {header:1} above to our call to sheet_to_json() allows us to receive the rows as an array of arrays. Of course, there are other various output formats, you can read more starting from this section. Here’s a sample output of our rows, as an array of arrays:

Now that we’ve extracted our data, it’s time to start our import with Livewire.

Sharing Data

Livewire offers a seamless bridge to share JavaScript-friendly data, and method calls between client and server. As a result, we don’t need to create a separate route and controller just for importing our data. We can simply call a method in the server component from client JavaScript, and pass certain data types, like so:

// Include header param to return array of arrays

var rows = XLSX.utils.sheet_to_json(sheet, {header:1});

rows.shift();// Remove header

+ @this.importData(rows)

+ rows = null; // Release

The above should make a call to the server triggering a method named importData, and pass the rows variable as a parameter. Here’s how the method will look in our component:

/* \app\Http\Livewire\ExcelImporter */

public function importData( $rows ){}

Fly.io ❤️ Laravel

Fly your servers close to your users—and marvel at the speed of close proximity. Deploy globally on Fly in minutes!

Deploy your Laravel app! →

Now that we have a hold of our imported rows in the server, we’ll have to make sure that they’re data we can process—it’s time for some validation.

Validating An Array of Arrays

Before we can process the rows received in our server, we’ll have to validate its column values first. Livewire reserves the $rules attribute in any Livewire controller, which we can use to add validation rules. We can validate each row in a generic manner like so: rows.*.

Now, let’s say, each 0th column within a row should always be available, and at the same time should be of some numeric value like:[[23],[12]]. To validate this rule, simply indicate the rule for the 0th index’s rule:

/* \app\Http\Livewire\ExcelImporter */

protected $rules = [

'rows.*.0' => 'required|numeric'

];

Once we’ve set up validation, let’s proceed with the pass or fail part. Livewire offers the validate() method to do a pass or fail on its public attributes’ rules listed in $rules.

In our case, our rule is intended for a rows attribute, and so we declare that as a public attribute, and set its value. Once it’s set with the imported data, we trigger validation on it:

+ public $rows;

public function importData( $rows ){

+ // Set rows attribute first

+ $this->rows = $rows;

+ // Now pass or fail

+ $this->validate();

+ // Don't send back rows in response

+ $this->rows = [];

}

We can catch any validation errors in our view like so:

@foreach( $errors->all() as $error )

<div> {{ $error }} </div>

@endforeach

And in case we want to record the errors in the server, simply use a try catch block:

/* \app\Http\Livewire\ExcelImporter */

try{

//$this->validate()

}catch(\Illuminate\Validation\ValidationException $e){

$this->rows = []; // Don't send back rows in response

foreach( $e->validator->errors()->all() as $error )

Log::info( 'found error'.$error );

throw $e;

}

Queued Processing of Imported Data

Processing rows of data can take some time to complete, and would send our users into a spiraling dread of pause before they can move forward from their import duty.

Instead of making our users wait for this prolonged pause, we’ll send data we received to a job, and queue this job for processing in the background. This allows us to promptly respond with a processing status, without waiting for the process to complete.

Create a job with php artisan make:job ImportExcelDataJob. This should generate our Job file at app\Jobs\ImportExceltDataJob. We can call this from our Livewire component like so:

Make sure to import App\Jobs\ImportExcelDataJob in our component!

/* app\Http\Livewire\ExcelImporter */

public function importData( $rows ){

$this->rows = $rows;

$this->validate();

$this->rows = [];

+ ImportExcelDataJob::dispatch( $rows );

}

Then, to receive our $rows argument in the job class, we pass it to the job’s constructor, and finally process its value in the handle method:

/* app\Jobs\ImportExceltDataJob */

public function __construct( protected $rows ){}

public function handle( \App\Http\Services\ExcelRowProcessor $processor )

{

foreach( $this->rows as $key=> $row )

$processor->process($key, $row);

}

See the $processor parameter? It’s a service class we can create to process our import.

Responding

Now that our data-processing logic is sent to the background queue, we can as easily dispatch an event to the browser from our server like so:

/* app\Http\Livewire\ExcelImporter */

public function importData( $rows ){

$this->rows = $rows;

$this->validate();

$this->rows = [];

ImportExcelDataJob::dispatch( $rows );

+ $this->dispatchBrowserEvent('import-processing');

}

We can easily listen to this import-processing event from the view JavaScript like so:

/* app/resources/views/livewire/excel-importer */

window.addEventListener('import-processing', event => {

alert('Data has been sent for processing!');

});

Reasons For Tradition

Earlier above, we briefly went over the “upload first, import later” tradition of importing spreadsheet data to our servers.

We might have circumvented this tradition today, but we must take note that there is pretty good reason why it has stood the test of time. One of the primary reasons hinge around the processing of large files.

Imagine parsing MB’s of spreadsheet data in the client browser—Aha, that’s going to be pretty laggy. Of course there are some “workarounds” to this:

Web Workers

SheetJS CE recommends the use of web workers to read large files. The idea is to simply enclose our “extract snippet” into a “web worker” object that will run a separate process away from client browser to process the snippet.

Declare a Worker object called mWorker, and enclose it with our “extract” snippet:

var mWorker = new Worker(URL.createObjectURL(new Blob([`\

// Import SheetJS CE in web worker

importScripts("https://cdn.sheetjs.com/xlsx-latest/package/dist/xlsx.full.min.js");

self.addEventListener('message', (e) => {

// The file we passed is read as "e.data"

const reader = new FileReader();

reader.readAsArrayBuffer(e.data);

// Parse file

reader.onload = function(event) {

// Parsed content

var workbook = XLSX.read(event.target.result);

event.target.result = null;

// Go through each sheet

workbook.SheetNames.forEach(function(sheetName) {

// Current sheet

var sheet = workbook.Sheets[sheetName];

// Include header param to return array of arrays

var param = {header:1};

var rows = XLSX.utils.sheet_to_json(sheet, param);

rows.shift();

});

}

});

`]) ));

Since a web worker is separate from our browser, the configuration/scripts/variables in the browser’s script is not included in the web worker. This means we can’t use Livewire’s @this.import() method from the web worker.

Instead, we’ll send back rows data from the worker to the browser. We can do so through its postMessage interface.

/* Inside web worker's reader.onload codeblock: */

workbook.SheetNames.forEach(function(sheetName) {

// Parsed content

var workbook = XLSX.read(event.target.result);

// Include header param to return array of arrays

var param = {header:1};

var rows = XLSX.utils.sheet_to_json(sheet, param);

rows.shift();

+ // Pass a sheet's rows back to browser

+ postMessage({ rows });

});

Now that we have our mWorker setup, revise the process() function to pass a selected file to this worker, with the help of its postMessage:

function process(){

mWorker.postMessage(fileElement.files[0]);

return false;

}

Once the mWorker emits postMessage from its process, our browser’s script can listen to this, and add the rows as an item to a list of sheet rows:

let sheetRows = [];

mWorker.onmessage = function(e) {

sheetRows.push( e.data.rows );

};

Batched Sharing of Rows

Our server has limits on the request size it can receive, and so we can’t simply just send the entire e.data.rows received above. Instead we’ll call another function, batchSend:

var sheetRows = [];

mWorker.onmessage = function(e) {

sheetRows.push( e.data.rows );

+ batchSend( sheetRows.length-1 );

};

And from there, “batch” the number of rows we send to our importData method likeso:

A batch will have a start and end index, forming a sub range from the sheet’s original array of rows.

function batchSend( index ){

// Some truths our batching depends on for this sheet

var batchSize = 100;

var rowSize = sheetRows[index].length;

// Get batch range

var start = 0;

var end = Math.min( start + batchSize, rowSize );

// Get sliced rows range for current sheet

var range = sheetRows[index].slice( start, end );

// Remove range from our current sheet, since `range` holds it

sheetRows[index] = sheetRows[index].slice( end, rowSize );

// Send range to server

@this.importData( range, index );

}

Notice how we update sheets[index] with a smaller version of its array with each range sent to the server? As that range has already been sent to the server, we can remove it from our reference.

Now that the sheet’s first batch of rows has been sent to the server, it’s time to send the next batch in. To do so, we’ll have to pass the current sheet’s $index as parameter to importData() in the component. And include that index in the batch-processed event:

public function importData( $rows, $sheetIndex )

{

$this->rows = $rows;

$this->validate();

$this->rows = [];

// Include sheetIndex to the job and service class as well...

ImportExcelDataJob::dispatch( $rows, $sheetIndex );

+ $this->dispatchBrowserEvent('import-processing',[

'sheetIndex' => $sheetIndex

]);

}

Then from our import-processing listener in JavaScript, send in the sheet’s next batch:

window.addEventListener('import-processing',function(event){

var sheetIndex = event.detail.sheetIndex;

if( sheetRows[sheetIndex].length > 0 ){

batchSend( sheetIndex );

}else{

alert( sheetIndex + 'Sheet\'s Data sent for processing!');

}

});

Papa Parse For CSVs

If we’re only accepting CSV files, we can use another package to parse data more efficiently. Papa Parse is a fast CSV parser for JavaScript, and, it can stream our file for reading, significantly reducing memory usage!

<script src="https://cdnjs.cloudflare.com/ajax/libs/PapaParse/5.4.1/papaparse.min.js" ></script>

function papaParse( file ){

// Create a sheet in our list

sheetRows.push([]);

// Stream Read file

Papa.parse(file, {

// Read file row by row through step

step: function(row) {

// Always at 0 since CSVs can only have one sheet

sheetRows[0].push(row);

},

complete: function() {

// Once all rows are added, start batch-sending the rows

batchSend( 0 );

}

});

}

Looking Back on Tradition

Today we learned to import data directly from client to server using JavaScript packages, and Livewire. Along the way, we also learned why we have the “upload first, import later” tradition in importing data. It’s not cool to burden user’s machines with parsing large files.

Of course, there are some ways to help alleviate burden from our client browser—like using web workers, or using stream reading to parse sections of our file at a time.

To upload, or not to upload? Well—after reading through this article, if uploading the file sounds the better route, large files will still cause some 413 errors during upload. But, when there’s a will, there’s a way! Why not try uploading the file, in chunks?