Phoenix LiveView lets you build interactive, real-time applications without dealing with client-side complexity. This is a post about the guts of LiveView. If you just want to ship a Phoenix app, the easiest way to learn more is to try it out on Fly.io; you can be up and running in just a couple minutes.

LiveView started with a simple itch. I wanted to write dynamic server-rendered applications without writing JavaScript. Think realtime validations on forms, or updating the quantity in a shopping cart. The server would do the work, with the client relegated to a dumb terminal. It did feel like a heavy approach, in terms of resources and tradeoffs. Existing tools couldn’t do it—but I was sure that this kind of application was at least possible. So I plowed ahead.

Three years later, what’s fallen out of following this programming model often feels like cheating. “This can’t possibly be working!” But it is. Everything is super fast. Payloads are tiny. Latency is best-in-class. You write less code. More than that: there’s simply less to think about when writing features.

This blows my mind! It would be fun to say I’d envisioned ahead of time that it would work out like this. Or maybe it would be boring and pompous. Anyway, that’s not how it happened.

To understand how we arrived where we are, we’ll break down the ideas in the same way we evolved LiveView to what it is today: we’ll start with a humble example, then we’ll establish the primitives LiveView needed to solve it. Then we’ll see how, almost by accident, we unlocked a particularly powerful paradigm for building dynamic applications. Let’s go.

A Humble Start

We’ll start small. Say you want to build an e-commerce app where you can update the quantity of an item like a ticket reservation. Ignoring business logic, the actual mechanics of the problem involve updating the value on a web page when a user clicks a thing. A counter.

This shouldn’t be difficult. But we’re used to navigating labyrinths of decisions, configuring build tools, and assembling nuts and bolts to introduce even the simplest business logic. We set out to build a counter, and first we must invent the universe. Does it have to be this way?

Conceptually, what we really want is something like what we do in client-side libraries like React: render some dynamic values within a template string, and the UI updates with those changed values. But instead of a bit of UI running on the client, what if we ran it on the server? The live view might look like this:

```elixir
def render(assigns) do
  ~H"""
  <span><%= ngettext("One item left", "%{count} items left", @remaining) %></span>
  <%= if @remaining > 0 do %>
    <button phx-click="reserve"><%= gettext("reserve") %></button>
  <% else %>
    <%= gettext("Sorry, sold out!") %>
  <% end %>
  """
end

def mount(%{"id" => id}, _session, socket) do
  {:ok, assign(socket, remaining: 10, id: id)}
end
```

We can render a template and assign some initial state when the live view mounts.

Next, our interface to handle UI interactions could look something like traditional reactive client frameworks. Your code has some state, a handler changes some state, and the template gets re-rendered. It might look like this:

```elixir
def handle_event("reserve", _, socket) do
  %{current_user: current_user, id: id} = socket.assigns

  case Reservations.reserve_ticket(current_user, id) do
    {:ok, remaining} ->
      {:noreply,
       socket
       |> put_flash(:info, gettext("1 ticket reserved!"))
       |> assign(:remaining, remaining)}

    {:error, :nostock} ->
      {:noreply, assign(socket, :remaining, 0)}
  end
end
```

UI components are necessarily stateful creatures, so we know we’ll need a stateful WebSocket connection with Phoenix Channels which can delegate to our mount and handle_event callbacks on the server.
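To make that delegation concrete, here is a minimal JavaScript sketch of the naive server loop, assuming each connected client gets its own stateful object. The `createLiveConnection` helper and the `view` object shape are hypothetical stand-ins for Phoenix Channels and the Elixir callbacks above, not LiveView's API.

```javascript
// Hypothetical sketch of the naive server loop, not LiveView's API:
// one stateful "connection" per client, delegating to view callbacks.
function createLiveConnection(view, params) {
  // Like mount/3: build the initial state, which lives as long as the connection
  let state = view.mount(params);

  return {
    // On join, render the full template once
    join() {
      return { html: view.render(state) };
    },
    // Like handle_event/3: update state, then re-render the WHOLE template
    push(event, payload) {
      state = view.handleEvent(event, payload, state);
      return { html: view.render(state) };
    },
  };
}

// A toy view shaped like the reservation example
const label = (n) => (n === 1 ? "One item left" : `${n} items left`);

const reservationView = {
  mount: ({ id }) => ({ id, remaining: 10 }),
  handleEvent: (event, _payload, state) =>
    event === "reserve" && state.remaining > 0
      ? { ...state, remaining: state.remaining - 1 }
      : state,
  render: ({ remaining }) =>
    `<span>${label(remaining)}</span>` +
    (remaining > 0
      ? '<button phx-click="reserve">reserve</button>'
      : "Sorry, sold out!"),
};

const conn = createLiveConnection(reservationView, { id: "1" });
conn.join().html;              // contains "10 items left"
conn.push("reserve", {}).html; // contains "9 items left"
```

Every event re-renders and returns the entire template; that is the naive first pass, warts and all.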

We want to be able to update our UI when something is clicked, so we wire up a click listener on phx-click attributes. The phx-click binding will act like an RPC from client to server. On click we can send a WebSocket message to the server and update the UI with an el.innerHTML = newHTML that we get as a response. Likewise, if the server wants to send an update to us, we can simply listen for it and replace the inner HTML in the same fashion. The first pass would look like this on the client:

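```javascript
import {Socket} from "phoenix"

// Assumes the endpoint exposes a socket at "/live"
let socket = new Socket("/live")
socket.connect()

let main = document.querySelector("[phx-main]")
let channel = socket.channel("lv")

// Render the initial HTML on join, and any HTML the server pushes later
channel.join().receive("ok", ({html}) => main.innerHTML = html)
channel.on("update", ({html}) => main.innerHTML = html)

// phx-click acts as an RPC: push the event name, render the HTML reply
window.addEventListener("click", e => {
  let event = e.target.getAttribute("phx-click")
  if(!event){ return }
  channel.push("event", {event}).receive("ok", ({html}) => main.innerHTML = html)
})
```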

This is where LiveView started—go to the server for interactions, re-render the entire template on state change, send the entire thing down to the client, and replace the UI.

It works, but it’s not great.

There’s a lot of redundant work done on the server for partial state changes, and the network is saturated with large payloads to only update a number somewhere on a page.

Still, we have our basic programming model in place. It only takes annotating the DOM with phx-click and a few lines of server code to dynamically update the page. Undeterred by the shortcomings of our plumbing, we press on to what we’re really excited about: realtime applications.

Things get realtime

Phoenix PubSub is distributed out of the box. We can have our LiveView processes subscribe to events and react to platform changes regardless of which server broadcast the event. In our case, we want to level up our humble reservation counter by having the count update as other users claim tickets.

We get to work and crack open our Reservations module:

```elixir
defmodule App.Reservations do
  ...

  def subscribe(event_id), do: Phoenix.PubSub.subscribe(App.PubSub, "events:#{event_id}")

  def reserve_ticket(%User{} = user, %Event{id: id}) do
    ...
    broadcast(id, :reserved, %{event_id: id, remaining: remaining})
  end

  defp broadcast(id, event, msg) do
    Phoenix.PubSub.broadcast(App.PubSub, "events:#{id}", {__MODULE__, event, msg})
  end
end
```

We add a subscription interface to our reservation system, then we modify our business logic to broadcast a message when reservations occur. Next, we can listen for events in our LiveView:

```elixir
def mount(%{"id" => id}, _session, socket) do
  if connected?(socket), do: Reservations.subscribe(id)
  {:ok, assign(socket, remaining: 2, id: id)}
end

def handle_info({Reservations, :reserved, %{remaining: remaining}}, socket) do
  {:noreply, assign(socket, remaining: remaining)}
end
```

First, we subscribe to the reservation system when our LiveView mounts, then we receive the event in a regular Elixir handle_info callback. To update the UI, we simply update our state as usual.

Here’s what’s neat – now whenever someone clicks reserve, all users have their LiveView re-render and send the update down the wire. It cost us 10 lines of code.
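A rough JavaScript model of that fan-out, assuming a hypothetical in-memory `PubSub` class in place of Phoenix.PubSub (which is distributed across a cluster; this toy is not). Each subscriber stands in for one user's LiveView process: a single broadcast updates every subscriber's state, and each one re-renders on its own.

```javascript
// Hypothetical in-memory stand-in for Phoenix.PubSub (single node only)
class PubSub {
  constructor() {
    this.topics = new Map(); // topic -> Set of handler functions
  }
  subscribe(topic, handler) {
    if (!this.topics.has(topic)) this.topics.set(topic, new Set());
    this.topics.get(topic).add(handler);
  }
  broadcast(topic, message) {
    for (const handler of this.topics.get(topic) ?? []) handler(message);
  }
}

// Each "LiveView" keeps its own state and re-renders when a message arrives
function startLiveView(pubsub, eventId, sent) {
  let state = { remaining: 10 };
  pubsub.subscribe(`events:${eventId}`, ({ remaining }) => {
    state = { ...state, remaining };                   // like handle_info: update state
    sent.push(`<span>${state.remaining} left</span>`); // re-render and "push" the HTML
  });
}

const pubsub = new PubSub();
const tabA = [];
const tabB = [];
startLiveView(pubsub, 42, tabA);
startLiveView(pubsub, 42, tabB);

// Someone reserves a ticket; both tabs receive the new count
pubsub.broadcast("events:42", { remaining: 9 });
```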

We test it out side-by-side in two browser tabs. It works! We start doubting the scalability of our naive approach, but we marvel at what we didn’t write.

Phoenix screams on Fly.io.

Phoenix is a win anywhere. But Fly.io was practically born to run it. With super-clean built-in private networking for clustering and global edge deployment, LiveView apps feel like native apps anywhere in the world.


Happy accident #1: HTTP disappears

To make the reservation count update on all browsers, we only wrote a handful of lines of server code. We didn’t even touch the client. The existing LiveView primitives of a dumb bidirectional pipe pushing RPC and HTML were all we needed to support cluster-wide UI updates.

Think about that.

There was no library or user-land JavaScript to add. Our reservation LiveView didn’t even consider how the data makes it to the client. Our client code didn’t have to become aware of out-of-band server updates because we already send everything over WebSockets.

And a revelation hits us. HTTP completely fell away from our thoughts while we implemented our reservation system. There were no routes to define for updates, or controllers to create. No serializers to define for payload contracts. New features are now keystrokes away from realization.

Unfortunately, this comes at the cost of server resources, network bandwidth, and latency. Broadcasting updates means an arbitrary number of processes are going to recompute an entire template to effectively push an integer value change to the client. Likewise, the entire template string goes down the pipe to change a tiny part of the page.
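Some back-of-the-envelope arithmetic shows the waste. This sketch uses a made-up page (fifty copies of a placeholder `<nav>` string standing in for real static markup) and compares re-sending the whole rendered page against sending only the changed value; the numbers are illustrative, not measurements of a real app.

```javascript
// Illustrative only: a hypothetical page whose only dynamic part is a count
function renderPage(remaining) {
  const chrome = "<nav>...</nav>".repeat(50); // stand-in for static markup
  return `${chrome}<span>${remaining} items left</span>`;
}

const fullUpdate = renderPage(9);                 // naive: the whole page again
const minimalUpdate = JSON.stringify({ 0: "9" }); // only the changed value

console.log(fullUpdate.length, minimalUpdate.length); // 725 vs 9 characters
```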

We know there’s something special here, but we need to optimize the server and network.

Making it fast

Optimizing the server to compute minimal diffs involves some of the most complex parts of the LiveView codebase, but conceptually it’s quite simple. Our optimizations have two goals. First, we only want to execute those dynamic parts of a template that actually changed from the previous render. Second, we only want to send the minimal data necessary to update the client.

We can achieve this in a remarkably simple way. Rather than doing some advanced virtual DOM on the server, we simplify the problem. An Elixir HTML template is nothing more than a bunch of HTML tags and attributes, with Elixir expressions mixed in the places we want dynamic data.

Looking at it from that direction, we can optimize simply by splitting the template into static and dynamic parts. Consider the following LiveView template:

```heex
<span class={@class}>Created: <%= format_time(@created_at) %></span>
```
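A toy version of that split, assuming the EEx-style spelling of the same template (`class="<%= @class %>"` rather than HEEx's `class={@class}`, so the attribute quotes are literal text). LiveView does this in its Elixir compiler with far more care; this regex-based JavaScript sketch only shows the shape of the result.

```javascript
// Toy splitter: NOT how LiveView's compiler works, just the idea.
// String.prototype.split with a capture group keeps the captured text,
// so even indices are static HTML and odd indices are expressions.
function splitTemplate(source) {
  const parts = source.split(/<%=\s*([\s\S]*?)\s*%>/);
  return {
    static: parts.filter((_, i) => i % 2 === 0),
    dynamic: parts.filter((_, i) => i % 2 === 1),
  };
}

const { static: statics, dynamic } = splitTemplate(
  '<span class="<%= @class %>">Created: <%= format_time(@created_at) %></span>'
);
// statics: ['<span class="', '">Created: ', '</span>']
// dynamic: ['@class', 'format_time(@created_at)']
```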

At compile time, we can turn the template into a data structure like this:

```elixir
%Phoenix.LiveView.Rendered{
  static: ["<span class=\"", "\">Created: ", "</span>"],
  dynamic: fn assigns ->
    [
      if(changed?(assigns, :class), do: assigns.class),
      if(changed?(assigns, :created_at), do: format_time(assigns.created_at))
    ]
  end
}
```

We split the template into static and dynamic parts. Because the static parts never change, they are stored once, as the strings that sit between the dynamic Elixir expressions. For each expression, we compile the variable access and execution with change tracking. Since we have a stateful system, we can compare the previous assign values with the new ones, and only execute a template expression if a value it depends on has changed.
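The same idea can be sketched in JavaScript. Here each dynamic slot lists the assigns it reads (a simplification of the tracking LiveView's compiler derives from `@` accesses), and a render re-executes only the slots whose assigns changed. `formatTime`, `renderDynamics`, and the call counter are hypothetical, there to make the skipped work visible.

```javascript
// Stand-in for format_time/1, counting calls so we can see skipped work
let formatCalls = 0;
const formatTime = (date) => {
  formatCalls++;
  return date.toISOString().slice(0, 10);
};

// "Compiled" template: statics plus dynamic slots tagged with their dependencies
const rendered = {
  static: ['<span class="', '">Created: ', "</span>"],
  dynamic: [
    { deps: ["class"], run: (a) => a.class },
    { deps: ["created_at"], run: (a) => formatTime(a.created_at) },
  ],
};

// Assigns whose value differs from the previous render (all of them on mount)
function changedKeys(prev, next) {
  return new Set(Object.keys(next).filter((k) => !prev || prev[k] !== next[k]));
}

// Run only the slots whose dependencies changed; unchanged slots yield null
function renderDynamics(template, prev, next) {
  const changed = changedKeys(prev, next);
  return template.dynamic.map((slot) =>
    slot.deps.some((d) => changed.has(d)) ? slot.run(next) : null
  );
}

const mounted = { class: "active", created_at: new Date("2022-04-27T00:00:00Z") };
const updated = { ...mounted, class: "inactive" };

const first = renderDynamics(rendered, null, mounted);     // ["active", "2022-04-27"]
const second = renderDynamics(rendered, mounted, updated); // ["inactive", null]
// formatTime ran only once: the second render skipped it
```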

Instead of sending the entire thing down on every change, we can send the client all the static and dynamic parts on mount, then only send the partial diff of dynamic segments for each update.

We can do this by sending the following payload to the client on mount:

```javascript
{
  s: ["<span class=\"", "\">Created: ", "</span>"],
  0: "active",
  1: "2022-04-27"
}
```

The client receives a simple map of static values in the s key, and dynamic values keyed by the index of their location in the template. For the client to produce a full template string, it simply zips the static list with the dynamic segments, for example:

```javascript
["<span class=\"", "active", "\">Created: ", "2022-04-27", "</span>"].join("")
// => '<span class="active">Created: 2022-04-27</span>'
```
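Generalizing that one-off join, here is a client-side sketch that rebuilds the HTML from any flat payload of this shape: static strings in `s`, dynamic values under the integer keys `0` to `n-1`. The `toHTML` name is ours, and the nested diffs, comprehensions, and components that real LiveView payloads also carry are deliberately left out.

```javascript
// Zip statics with dynamics: s[0] + d[0] + s[1] + d[1] + ... + s[n]
function toHTML(payload) {
  const statics = payload.s;
  let html = statics[0];
  for (let i = 1; i < statics.length; i++) {
    html += payload[i - 1] + statics[i];
  }
  return html;
}

const mountPayload = {
  s: ['<span class="', '">Created: ', "</span>"],
  0: "active",
  1: "2022-04-27",
};

toHTML(mountPayload); // '<span class="active">Created: 2022-04-27</span>'
```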

With the client holding the initial payload of static and dynamic values, optimizing the network on update becomes easy. The server now knows which dynamic keys have changed, so when a state change occurs, it renders the template, which lazily executes only the changed dynamic segments. On return, we receive a map of new dynamic values keyed by their position in the template. We then pass this payload to the client.

For example, if the LiveView performed assign(socket, :class, "inactive"), the following diff would fall out of the render/1 call and be sent down the wire:

```javascript
{0: "inactive"}
```

That’s it! And to turn this little payload back into an updated UI for the client, we only need to merge this object into our cache of static and dynamic values:

```javascript
{
  s: ["<span class=\"", "\">Created: ", "</span>"],
  0: "inactive", // merged in from the diff (was "active")
  1: "2022-04-27"
}
```

Then we zip the merged data together and now our new HTML can be applied like before via an innerHTML update.
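The whole client update path then fits in a few lines. This sketch assumes the same flat payload shape as the mount payload above; `applyDiff` is a hypothetical name, and a real client would hand the resulting HTML to a DOM-patching step rather than just returning it.

```javascript
// Cache of statics + dynamics, received once on mount
const initial = {
  s: ['<span class="', '">Created: ', "</span>"],
  0: "active",
  1: "2022-04-27",
};

// Zip statics with dynamics: s[0] + d[0] + s[1] + d[1] + ... + s[n]
// (reduce without a seed starts from s[0] at index 1)
function toHTML(payload) {
  return payload.s.reduce((html, str, i) => html + payload[i - 1] + str);
}

// Merge a diff (only the changed keys) over the cache, then rebuild the HTML.
// Returns a new cache instead of mutating the old one.
function applyDiff(cache, diff) {
  const merged = { ...cache, ...diff };
  return { cache: merged, html: toHTML(merged) };
}

const result = applyDiff(initial, { 0: "inactive" });
// result.html === '<span class="inactive">Created: 2022-04-27</span>'
```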

Replacing the DOM container’s innerHTML works, but wiping out the entire UI on every little change is slow and problematic: it throws away focus, cursor position, and any other DOM state. To optimize the client, we can pull in morphdom, a DOM diffing library that takes two DOM trees and applies the minimal set of operations needed to make the source tree look like the target tree. In our case, this is all we need to close the client/server optimization loop.

We build a few prototypes and realize we’ve created something really special. Somehow our naive, heavy templates are more nimble than the React and GraphQL apps we used to sling. But how?

Happy accident #2: best in class payloads, free of charge

One of the most mind-blowing moments that fell out of the optimizations was seeing how naive template code somehow produced payloads smaller than the best hand-rolled JSON APIs, and even sent less data than our old GraphQL apps.

One of the neat things about GraphQL is the client can ask the server for only the data it wants. Put simply, the client can ask for a user’s username and birthday, and it won’t be sent any other keys of the canonical user model. This is super powerful, but it must be specified on the server via typed schemas to work.

How then, does our LiveView produce nearly keyless payloads with no real typed information?

The answer is it was mostly by accident. Going back to our static/dynamic representation in the Phoenix.LiveView.Rendered struct, our only goal initially was thinking about how to represent a template in a way that allowed us to avoid sending all the markup and HTML bits that don’t change. We weren’t thinking about solving the problem of client/server payload contracts at all.

There’s a lesson here that I still haven’t fully unpacked. In the same way that, as a user of LiveView, I stopped concerning myself with HTTP client/server contracts, as an implementer of LiveView I had also moved on from thinking about payload contracts. Yet somehow this dumb bidirectional pipe that sends RPCs and diffs of HTML now allows users to achieve best-in-class payloads without actually spec’ing those payloads. This still tickles my mind.

One of the other really interesting parts of the LiveView journey is how the programming model never changed beyond our naive first pass. The code we actually wanted to write in the beginning never needed to change to enable all these powerful optimizations and code savings. We simply took that naive first pass and kept chipping away to make it better. This led to other happy accidents.

Happy accident #3: lazy loading and bundle-splitting without the bundles

As we built apps, we’d examine payloads and find areas where more data would be sent than we’d like. We’d optimize that. Rinse and repeat. For example, we realized the client often receives the same shared fragments of static data for different parts of the page. We optimized by sending static shared fragments and components only a single time.

Imagine our surprise on the other side of finishing these optimizations when we realized we solved a few problems that all client-side frameworks must face, without the intention of doing so.

Build tools and client side frameworks have code splitting features where developers can manage how their various JavaScript payloads are loaded by the client. This is useful because bundle size is ever increasing as more templates and features are added. For example, if you have templates and associated logic for a subset of users, your bundle will include all supporting code even for users who never need it. Code splitting is a solution for this, but it comes at the cost of complexity:

- Developers now have to consider where to split their bundles

- The build tools must be configured to perform code splitting

- Developers have to refactor their code to perform lazy loading

With our optimizations, lazy loading of templates comes for free, and the client never receives a live component template it has already seen from the server. No bundling required, and no build tools to configure.
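The server-side half of "never resend a template" can be sketched as a per-connection set of template fingerprints. This is a simplified JavaScript model under our own assumptions (a hypothetical `TemplateTracker` class, string fingerprints, flat payloads); LiveView's actual fingerprinting and component caching are more involved.

```javascript
// Per-connection record of which templates this client already holds
class TemplateTracker {
  constructor() {
    this.seen = new Set();
  }
  // Attach statics only the first time a fingerprint goes to this client
  encode(fingerprint, statics, dynamics) {
    const payload = { ...dynamics };
    if (!this.seen.has(fingerprint)) {
      payload.s = statics;
      this.seen.add(fingerprint);
    }
    return payload;
  }
}

const tracker = new TemplateTracker();
const itemStatics = ['<div class="item">', " tickets left</div>"];

const firstSend = tracker.encode("reserve_item", itemStatics, { 0: "3" });  // includes s
const secondSend = tracker.encode("reserve_item", itemStatics, { 0: "2" }); // dynamics only
```

The client-side counterpart simply keeps the statics it received the first time and reuses them for every later payload with the same fingerprint.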

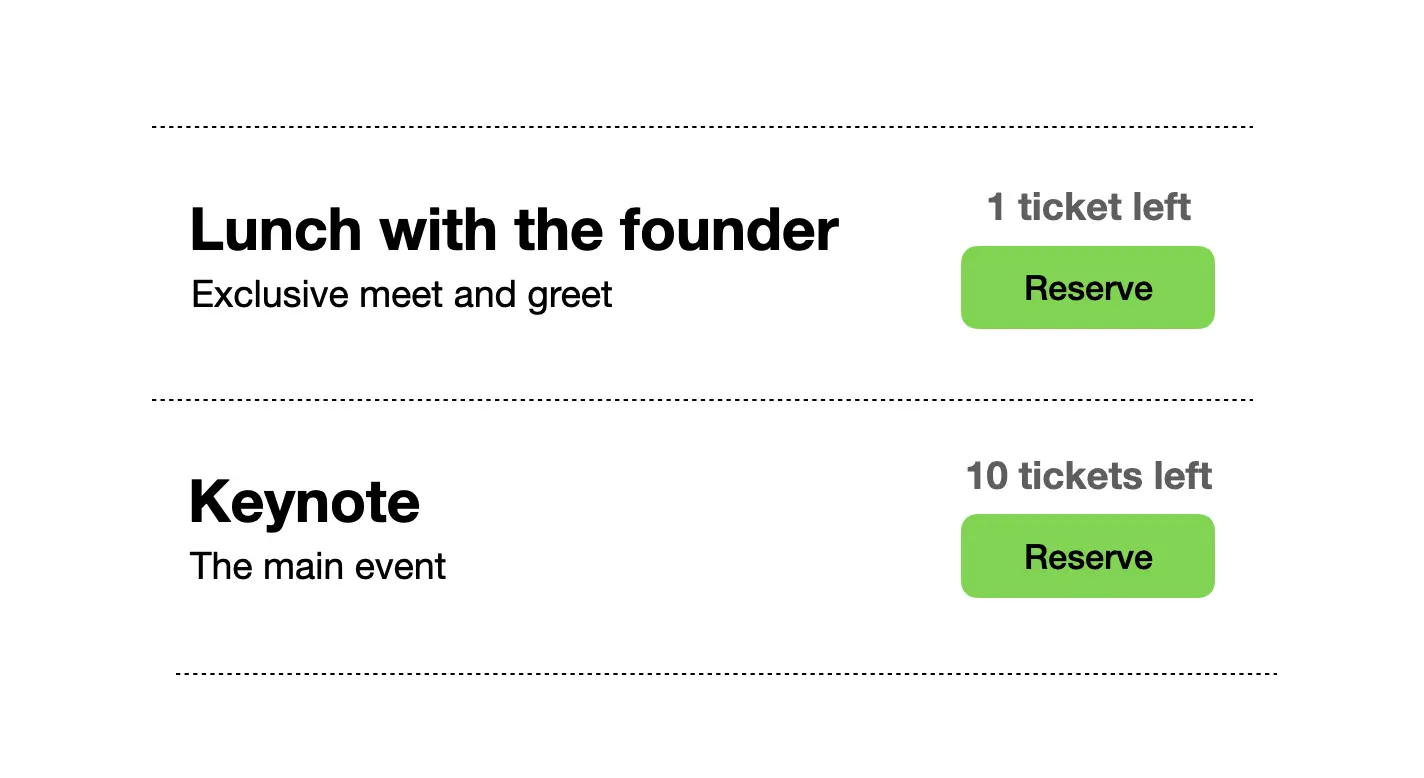

Here’s how it works. Imagine we want to update our reservation system to render a list of available events that can be reserved. It might look something like this:

Which we could render with a reusable reserve_item component:

```elixir
# Assumes Phoenix.LiveView.JS is aliased as JS and a <.button> function
# component is in scope (e.g. from the app's core components)
def render(assigns) do
  ~H"""
  <%= for event <- @events do %>
    <.reserve_item event={event}/>
  <% end %>
  """
end

defp reserve_item(assigns) do
  ~H"""
  <div class="m-2 p-2 border-y-2 border-dotted">
    <%= ngettext("One ticket left", "%{count} tickets left", @event.remaining) %>
    <%= if @event.remaining > 0 do %>
      <.button phx-click={JS.push("reserve", value: %{id: @event.id})}>
        <%= gettext("reserve") %>
      </.button>
    <% else %>
      <%= gettext("Sorry, sold out!") %>
    <% end %>
  </div>
  """
end
```

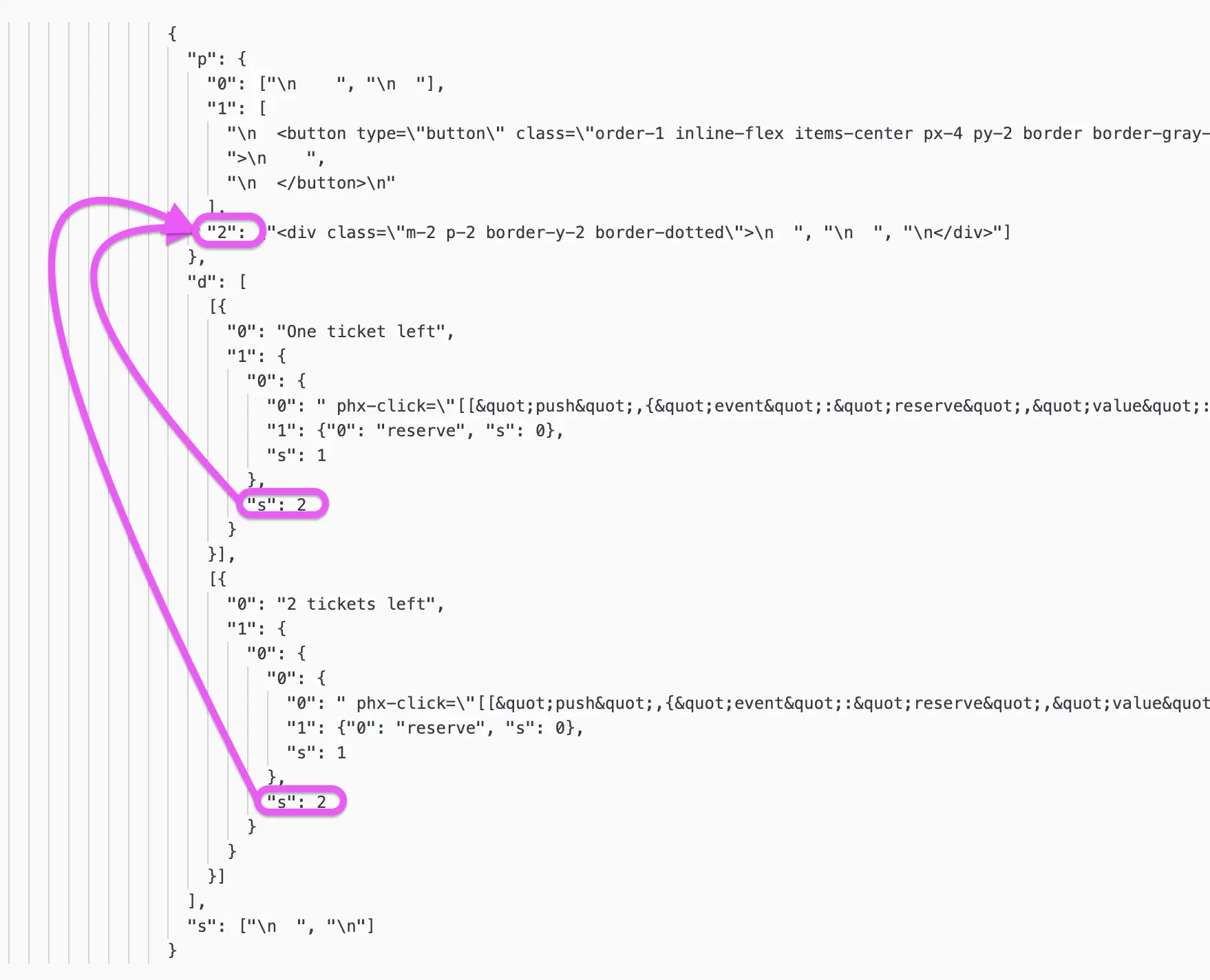

We modified our initial reserve template to render a reservation button from a list of events. When LiveView performs its diffing, it recognizes the use of the shared statics and the following diff is sent down wire:

Note the "p" key inside the diff. It contains a map of shared static template values that are referenced later for each dynamic item. When LiveView sees that a static ("s") value is an integer, it knows the value points to a shared template rather than holding the strings itself. LiveView also expands on this concept when using a live component, where static template values are cached on the client, and the template is never resent because the server knows which templates the client has or hasn’t seen.
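A client-side sketch of resolving those references. The payload format here is simplified and hypothetical (one shared template under `p`, and an `entries` list whose `s` is an integer index into `p` instead of a copy of the strings), but it shows why the reserve_item markup crosses the wire once no matter how many events are listed.

```javascript
// Resolve an entry's statics: an integer "s" points into the shared "p" map
function staticsFor(entry, shared) {
  return typeof entry.s === "number" ? shared[entry.s] : entry.s;
}

// Zip one entry's statics with its dynamic values (keys 0..n-1)
function renderEntry(entry, shared) {
  const s = staticsFor(entry, shared);
  return s.reduce((html, str, i) => html + entry[i - 1] + str);
}

const diff = {
  p: { 0: ['<div class="item">', " tickets left</div>"] },
  entries: [
    { s: 0, 0: "3" },
    { s: 0, 0: "2" },
    { s: 0, 0: "5" },
  ],
};

// Three items rendered, but the item markup appears only once in the diff
const html = diff.entries.map((e) => renderEntry(e, diff.p)).join("");
```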

Even with our humble reservation counter, there are other bundling concerns we skipped without noticing. Our template used localization function calls, such as <%= gettext("Sorry, sold out!") %>. We localized our app without even thinking about the details. For a dynamic app, you’d usually have to serialize a bag of localization data, provide some way to fetch it, and code split languages into different bundles.

As your LiveView application grows, you don’t concern yourself with bundle size because there is no growing bundle. The client gets only the data it needs, when it needs it. We arrived here without ever thinking about the problem of client bundles or carefully splitting assets, because LiveView applications hardly have client assets.

Less code, fewer moving parts

The best code is the code you don’t have to write. In an effort to make a counter on a webpage driven by the server, we accidentally arrived at a programming model that sidesteps HTTP. We now have friction-free dynamic feature development with push updates from the server. Our apps have lower latency than those client apps we used to write. Our payloads are more compact than the ones we carefully shaped with GraphQL schemas.

LiveView is, at its root, just a way to generate all the HTML you need on the server, so you can write apps without touching JS. More dynamic apps than you might expect, thanks to Elixir’s concurrency model. But even though I wrote the internals of it, I’m still constantly blown away by how well things came together, and finding surprising new ways to apply it to application problems.

All this from a hack that started with 10 lines of JavaScript pushing HTML chunks down the wire.