We’re Fly.io. We run apps for our users on hardware we host around the world. Fly.io happens to be a great place to run Phoenix applications. Check out how to get started!

I love ExDocs, I think they are one of the best parts about the Elixir Ecosystem and I frankly cannot shut up about it. My one complaint is that it can sometimes be hard to find exactly what you are looking for using ExDoc’s built in search.

My favorite example is that I know that Ecto.Repo has a function to dump the generated SQL to a string, but if you search inside of Ecto you get nothing. That’s because it’s in a different project called ecto_sql. What if we had one spot we could go to and search all of HexDocs?

In this post we will walk through how I downloaded, cleaned up, indexed and searched all of HexDocs using SQLite FTS5 and LiveView! It turned into a project that matches the real world incredibly well, warts and all! So I won’t be walking through every single step, but check it out on Github and try it out hosted here on Fly.io, let’s begin!

Downloadin’ Hex Docs

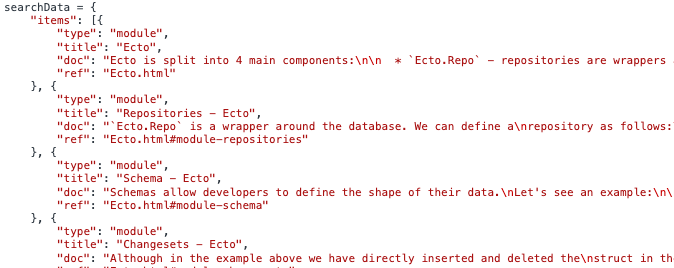

When you visit a ExDoc package and try using the search box, what you are using is a fully in-browser search experience provided by Lunrjs. When the ExDoc generated page is first visited it downloads a JavaScript payload that contains the “Search Data”, which is then indexed in the browser via Lunrjs, compressed and stored in the browser SessionStorage. Then there is some more JavaScript that does the autocomplete and rendering of the search fully in the browser.

We’re going to take advantage of that Search Data that comes with every hex package, and it looks like this:

ExDoc generates this for every package and uploads it to HexDocs for you. And because ExDoc has a special place in the world of Elixir, every Elixir project depends on it, including Elixir. So it can’t really depend on any project that’s not Elixir or fully vendored, like Lunr.js.

Which means that when ExDoc creates the above searchData.js file it has to encode the JSON directly. The good news is the “items” all have the same document shape, the, meh news is this filename is different for every deploy.

Garden Variety Extract, Transform and Load Pipeline

This project started as a fun project that scratches an itch for me, but building out an ETL pipeline is as “Real World” as programming gets. Much like the “Real World” this part of the project kept expanding in scope as I went along.

So we don’t get lost in the weed’s here is a little road map to keep us on tract.

E. Use the Hex API get a listing of all the packages

E. Scrape the Search Page of every package that has documentation looking for an script tag that contains search_items and download that file

T. We’re going to strip off all JavaScript in that file till we just have json, and then clean it up.

T. We’re going to parse that JSON using Jason, if that fails use a hand rolled Json parser that works with the cases that don’t work with Jason.

L. Store this into a SQLITE Table

E: Get the data

Enum.reduce_while(1..500, [], fn page, acc ->

data = API.list!(%{page: page, sort: "updated_at"})

current = Enum.count(data)

if current < 100 do

{:halt, [data | acc]}

else

{:cont, [data | acc]}

end

end)

|> Kernel.++(special_packages())

|> List.flatten()

We’re hitting the Hex API page by page and downloading all the data till we get a page less than size 100. I’m also adding in some “special packages” which are just Elixir, Eex, ExUnit, IEX, Logger, and Mix that are hosted on hex but not served via the API. Standard stuff here!

You’ll notice I’m just letting errors happen instead of handling them, this is a “for fun” project but its also practical as it stop’s this process as soon as something fails so I don’t need to re-run it a ton of times.

E: Scrape

def scrape_json(pkg)

pkg["docs_html_url"] <> "search.html"

|> grab_html(search_url)

|> grab_json(["search_items", "search_data"], url)

end

def grab_html(url) do

{:ok, document} = Req.get!(url).body

|> Floki.parse_document()

document

end

def grab_json(html, fnames, url) do

html

|> Floki.attribute("script", "src")

|> Enum.find(fn str ->

Enum.any?(fnames, fn fname ->

String.contains?(str, fname)

end)

end)

|> fetch_json(url)

end

defp fetch_json(nil, _), do: nil

defp fetch_json(path, url) do

Req.get!(URI.encode(url <> path)).body

end

We grab the search.html, parse the HTML using Floki, look for script tags that contain the file_name and then download the that JavaScript File. Pretty straight forward so far!

T: JSON

While reading this next section is it important to note that given the constraints I think the Elixir Team did an absolutely incredible job with ExDoc. Engineering is about working within constraints and they killed it. You would be hard-pressed to find any language that gives you machine-readable docs at all for any reason.

Here is where things get a little in the weeds, remember when I said different versions of ExDoc can produce different versions of that JavaScript? Well, it also hasn’t always produced “standards” compliant JSON. Thanks to the incredible engineering of Browsers and JavaScript engines, that doesn’t matter. In our case Jason expects to work with standards compliant JSON… Suffice to say ExDoc has been patched and a bunch of the following wouldn’t be necessary with latest ExDoc generated docs.

First we gotta cleanup all the JavaScript from around the JSON that we’re after:

def cleanup(<<"<", _::binary>>), do: "" # Html or something

def cleanup(nil), do: ""

def cleanup(str) do

str = str

|> String.trim()

|> String.trim_leading("searchNodes=")

|> String.trim_leading("searchData = ")

|> String.trim_leading("searchData=")

|> String.trim_leading("searchNodes = ")

|> String.trim_leading("sidebarNodes=")

|> String.trim_leading("sidebarNodes = ")

|> String.trim_leading("var versionNodes = ")

|> String.trim_leading("var versionNodes=")

|> String.trim_leading("versionNodes=")

|> String.trim_leading("versionNodes = ")

|> String.trim_trailing(";\nfillSidebarWithNodes(sidebarNodes);")

|> String.trim_trailing(";")

|> String.trim()

|> cleanup2()

end

This is pretty self-explanatory, over a decade the JS has had many forms and come with many functions. This next part is a little strange…

def cleanup2(str) do

case Regex.named_captures(~r/\<\<(?<binary>[\d\,\s]*)...\>\>/, str) do

nil -> str

%{"binary" => bin} ->

ha = bin

|> String.trim()

|> String.trim_trailing(",")

|> String.split(", ")

|> Enum.map(fn str -> <<String.to_integer(str)>> end)

|> Enum.join("")

Regex.replace(~r/\<\<([\d\,\s]*)...\>\>/, str, "\""<>ha<>"\"")

end

end

Remember when I mentioned that the JSON isn’t spec compliant? Well this is an example where certain types of binary strings weren’t being encoded correctly, I am sharing it here for posterity, but its been fixed.

I am not proud of what happens next but ExDoc has a hand built JSON Encoder, and for most of its existence it encoded the values by using the Inspect protocol. If everything lines up correctly, Jason will work, if not you need to parse it manually.

def try_decode(nil), do: nil

def try_decode(""), do: ""

def try_decode(str) do

str = cleanup(str)

try do

Jason.decode!(str)

rescue

e ->

Logger.error(e)

try do

decode!(str)

rescue

e ->

Logger.error(e)

str

end

end

end

So this is the part of the post where I hand rolled a JSON Decoder. And because I feel the need to explain the nested try rescues’, sometimes when you write some ugly code you want it to look ugly to match that.

First we try Jason.decode!, which works most of the time and is excellent, and should work for all new packages! If that fails, then we try my handle rolled decode! function. And if that fails, it’s not going to be indexed.

I won’t get into the meat of the functions, it’s here, but it tokenizes the JSON using a regex, and then recursively walks it into Lists and Maps. Which might feel anti-climatic but I don’t recommend anyone do this unless forced to do this for a simple for fun project.

L: SQLite

schema "packages" do

field :meta, :map

field :name, :string

field :docs_html_url, :string

field :downloads_all, :integer, default: 0

field :downloads_day, :integer, default: 0

field :downloads_recent, :integer, default: 0

field :downloads_week, :integer, default: 0

field :latest_docs_url, :string

field :html_url, :string

field :latest_stable_version, :string

field :last_pulled, :utc_datetime

field :search_items_json, {:array, :map}

field :sidebar_items_json, :map

field :docs_config_json, {:array, :map}

timestamps()

end

Here is the Ecto Package schema. We won’t dig too much into this, but we also grabbed some metadata that might be relevant later, and 2 other JSON objects that might come in handy later.

An important aspect of any ETL pipeline is that during the Load stages, you want to store as much data as you can within the constraints of your system. In this case, once we’ve downloaded all the data AND created an FTS index the SQLite db filesize is under 500mb, which is totally fine. And you never know what data might come in handy in the future:

- a new design comes down that requires us to show downloads

- turns out sidebar_items has cleaner data for search

- we want to update our index and only want packages that haven’t been updated recently

Some might argue to only store the minimum, that’s true for sensitive or extremely tight memory budgets, but in this case it’s neither! Alternatively we could have only created columns for data we care about, and have columns that store the raw JSON data.

Indexing

This is the part of the post where all the “hard” stuff is behind us, in retrospect I wouldn’t have estimated that simply collecting the data would have been 90% of the time spent and that is because ETL work is very difficult to estimate. In the “Real World” you really have to pad your estimates when working with outside data. Sometimes you get data in clean, standards compliant format with libraries to parse it, other times you get a CSV exported from Excel 97 with unknown quoting.

We have the data, it is loaded up into SQLite and cleaned up, lets make an index! I’ve gone into this a little bit before but here is my migration:

def up do

execute """

CREATE VIRTUAL TABLE packages_index USING fts5(

doc,

title,

type,

ref UNINDEXED,

package_id UNINDEXED,

tokenize="porter")

"""

end

def down do

drop table("packages_index")

end

I wish I had a better answer here for why I went with the porter tokenizer but I don’t, after a little experimentation I went with this one and it worked. You will need to make sure to keep this schema PackageIndex in sync with Packages but that’s not so bad if you are using context’s.

Searching

Just using this the default way ends up with pretty poor search results. A good example is trying out “Ecto.Query.preload” using the basic syntax:

from(i in PackageIndex, where: fragment("packages_index MATCH ?", ^"Ecto.Query.preload", order_by: [asc: "rank"]))

You will find that packages that use Ecto.Query.preload will come up before Ecto.Query.Preload because they reference this exact string more often than the Ecto.Query doc’s do. This won’t do for what I want:

Better Search

def search(term) do

term =

term

|> String.trim()

|> String.trim_trailing(".")

|> String.replace(" " , "+")

term_quoted = "\"#{term}\""

base = from(i in PackageIndex,

select: %{ i |

function_rank: fragment("bm25(packages_index, 10.0, 5.0, 1.0)"),

module_rank: fragment("bm25(packages_index, 5.0, 10.0, 1.0)"),

},

where: fragment("packages_index MATCH ?", ^term_quoted)

)

preload_query = from(p in Package, select: [:id, :name, :latest_stable_version, :docs_html_url])

# See Part 2

end

SQLite FTS5 is a sharp tool if you query something and don’t manually quote it, it will blow up with incomprehensible errors. We also want spaces to be inclusive so we replace them with +. I stripe off ending . because it will return no results if there is an ending period.

Then we set up a base query, with a function_rank, module_rank and a where clause limiting us to the search term. SQLite uses the bm25 algorithm for estimating a documents relevance based on a query. In this case, the function_rank weighs the the function name column higher than all the rest. The same for the module column in module_rank. And then we setup a preload function to only query the fields we want, and leave out all the JSOn.

# part 2

q = from(i in subquery(base),

join: p in assoc(i, :package),

select: %{ i |

rank: fragment("

CASE

WHEN (lower(?) = lower(?) and lower(?) = lower(?)) THEN -5000.0

WHEN ? = ? and (lower(?) = lower(?) or lower(?) = lower(?)) THEN -2000.0

WHEN ? = ? and ? = ? and instr(lower(?), lower(?)) = 1 and abs(length(?) - length(?)) < 3 THEN -1000.0

WHEN ? <> 'module' and instr(lower(?), lower(?)) = 1 and abs(length(?) - length(?)) < 3 THEN -500.0

WHEN instr(lower(?), lower(?)) = 1 THEN -30.0

WHEN instr(lower(?), lower(?)) = 1 THEN -20.0

ELSE 0.0

END + ? + ? + ?",

p.name, ^term,

i.title, ^term,

i.type, "module",

p.name, ^term,

i.title, ^term,

i.type, "module",

p.name, ^term,

i.title, ^term,

i.title, ^term,

i.type,

i.title, ^term,

i.title, ^term,

p.name, ^term,

i.title, ^term,

"function_rank", "module_rank", i.rank

)

}

)

q2 = from(i in subquery(q),

select: %PackageIndex{

id: i.id,

title: i.title,

type: i.type,

package_id: i.package_id,

ref: i.ref,

rank: i.rank

},

order_by: [asc: i.rank],

limit: ^limit

)

q2

|> Repo.all()

|> Repo.preload(package: preload_query)

Here is where the meat of the “better search” query is, in a massive CASE statement. I won’t go into every term, but basically we will boost packages that meet certain criteria from most to least:

- Exact Matches on name or title

- Module exact matches

- Query is in the package name

- …etc

Then I sum that extra “boost” with the built in rank that FTS5 gives when using the index and the function_rank and module_rank we calculated above. This gives us the base query that I can sort and limit on, while also boosting the VERY close matches. Which is exactly what we do in the final query. The end results is reasonable, and near instant queries for as you type.

Go check it out https://hex-search.fly.dev/!

This code is kinda ugly because it is the output of trial and error. I am deliberately not refactoring some of this so you can see that even experienced developers get frustrated and throw stuff at the wall hoping it would work.

The first version of this query would take entire seconds to search and still came up with terrible results. This kind of goes for all search, no matter what tool you end up using you will end up tinkering with it forever. Users will find a query that “should just work” because expectations are set by Google.

Discussion

My goal with this post was to walk you through my experiences while scratching a personal itch. Real World projects always end up messier than you’d like and that’s just life.

Please reach out and help make this better! Pull Requests are welcome and let me know if it ends up being useful to you, or roast me about how I missed a completely obvious way to approach this problem!